Summary

Hypothesis Planned Exploration (HyPE) is a novel approach to exploration in meta-reinforcement learning. Given the same sample budget, our method adapts successfully four times as often as the standard passive exploration strategy. I’ve contributed heavily to experimental design, test coverage (with pytest), and the training and evaluation infrastructure. The work is currently under review for publication at IJCAI 2026, and follow-up work is ongoing, with plans to submit to NeurIPS 2026.

Summary

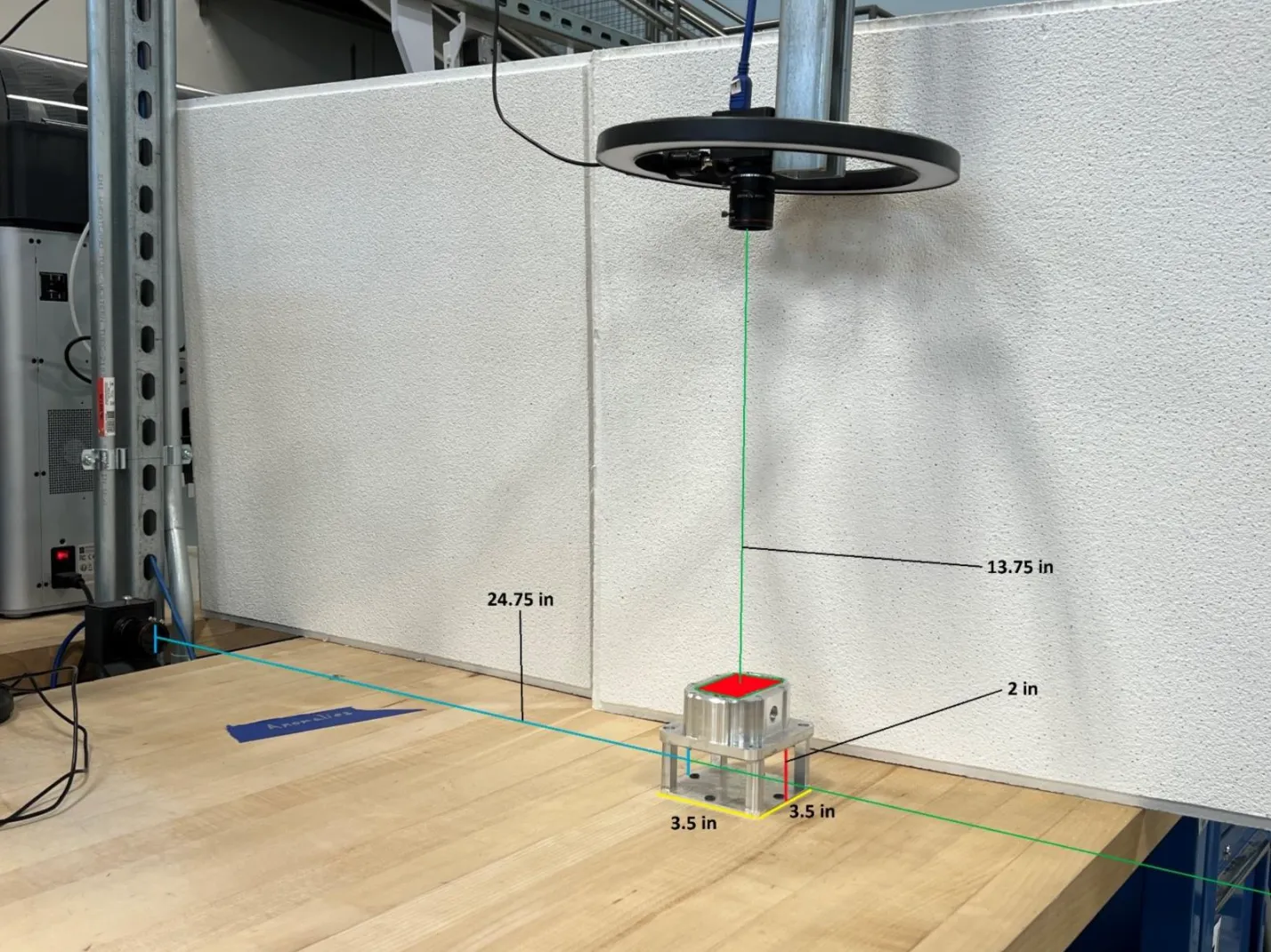

At Purdue’s Digital Enterprise Center, my research team focused on developing a manufacturing workflow that integrates IoT tools, computer vision, and Solumina MES to streamline the assembly process. To demonstrate this workflow, we assembled a commercial oil pump across three workstations, each responsible for a subset of the assembly steps. As part of this project, I built software for computer-vision-aided foreign debris detection, assembly verification, and comprehensive data collection.

Notable Work

- To speed up the training pipeline, I used image augmentation to expand our dataset by up to 64x, which made it efficient to add new objects of interest to our set of foreign objects. I also streamlined development and new-hire onboarding by building a CI/CD pipeline with the pdoc library and GitHub Actions workflows that automatically builds and deploys a documentation page for the data wrangling and model training scripts.

- Using Rust, Tauri, and Svelte, I developed a desktop application prototype for multi-camera selection and real-time object detection. Starting from the example object detection code in the official YOLOv8 repository, I improved performance 5x by parallelizing pre- and post-processing with Rayon.

- Rewrote legacy Python code, fixing major issues such as unsynchronized concurrent file access. Replaced inefficient system calls that spawned new command-line processes with lightweight threads, improving performance and resource management.

- Currently integrating the computer vision pipeline with Atlas Copco’s PF6000 controller to provide real-time feedback on the assembly process. This will let us detect and correct errors as they happen, reducing the need for manual inspection and rework. Assembly data is stored in Solumina MES through its native REST API for improved traceability and analysis.
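The write-up doesn't say which augmentations produced the 64x figure. One scheme that lands exactly on 64 is six independent on/off transforms, since 2^6 = 64 combinations per source image (counting the unmodified original). The minimal sketch below assumes that scheme; the specific transforms (`hflip`, `vflip`, `transpose`, `brighten`, `boost_contrast`, `add_noise`) are hypothetical choices, and images are plain nested lists of grayscale values so it runs on the standard library alone.

```python
import random
from itertools import product
from typing import Callable, List

# Grayscale image as rows of pixel values in [0, 255]
Image = List[List[int]]


def clamp(value: float) -> int:
    """Round and clip a pixel value into the valid [0, 255] range."""
    return max(0, min(255, round(value)))


def hflip(img: Image) -> Image:
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    return [row[:] for row in img[::-1]]


def transpose(img: Image) -> Image:
    return [list(col) for col in zip(*img)]


def brighten(img: Image, delta: int = 30) -> Image:
    return [[clamp(p + delta) for p in row] for row in img]


def boost_contrast(img: Image, factor: float = 1.5) -> Image:
    # Push pixel values away from mid-gray (128)
    return [[clamp(128 + (p - 128) * factor) for p in row] for row in img]


def add_noise(img: Image, amplitude: int = 10, seed: int = 0) -> Image:
    # A seeded RNG keeps the augmented dataset reproducible
    rng = random.Random(seed)
    return [[clamp(p + rng.randint(-amplitude, amplitude)) for p in row] for row in img]


# Hypothetical set of six independent on/off transforms
TRANSFORMS: List[Callable[[Image], Image]] = [
    hflip, vflip, transpose, brighten, boost_contrast, add_noise,
]


def augment(img: Image) -> List[Image]:
    """Return every on/off combination of TRANSFORMS: 2**6 = 64 variants."""
    variants = []
    for mask in product((False, True), repeat=len(TRANSFORMS)):
        out = img
        for enabled, transform in zip(mask, TRANSFORMS):
            if enabled:
                out = transform(out)
        variants.append(out)
    return variants


sample = [[0, 64], [128, 255]]
variants = augment(sample)
print(len(variants))  # 64
```

For an object detection dataset, the geometric transforms (flips, transpose) would also have to be applied to the bounding-box labels, which this sketch leaves out.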
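The legacy code itself isn't shown, so the following is only a minimal sketch of the two fixes in the rewrite bullet, under assumed names: `capture_station_data` is a hypothetical stand-in for work that used to be launched as a separate command-line process, and `append_record` serializes writes to a shared log file with a `threading.Lock` so concurrent writers can't interleave their output.

```python
import json
import os
import tempfile
import threading
from concurrent.futures import ThreadPoolExecutor


def capture_station_data(station_id: int) -> dict:
    # Hypothetical stand-in for work previously spawned as a new process,
    # e.g. os.system("python capture.py --station 2")
    return {"station": station_id, "status": "ok"}


_write_lock = threading.Lock()


def append_record(path: str, record: dict) -> None:
    # Only one thread may write at a time, so JSON lines never interleave
    with _write_lock:
        with open(path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")


def collect(path: str, station_ids: list[int]) -> None:
    def worker(station_id: int) -> None:
        append_record(path, capture_station_data(station_id))

    with ThreadPoolExecutor(max_workers=4) as pool:
        # list() drains the iterator so any worker exception is re-raised here
        list(pool.map(worker, station_ids))


log_path = os.path.join(tempfile.mkdtemp(), "assembly_log.jsonl")
collect(log_path, [1, 2, 3])
```

A `threading.Lock` only coordinates threads inside one process; if several processes still wrote to the same file, an OS-level file lock would be needed instead.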